ActiveRecord with large result sets - part 2: streaming data

In part 1 of this series I showed how using select_all instead of find(:all) can often speed up slow ActiveRecord queries when you expect a large amount of data, by as much as 30 or 40% with Rails 3. However, often 30-40% faster just isn’t enough. What difference does it make if I have to wait 3 minutes or 5 minutes for Rails to display a web page? Either way I’m going to get frustrated and abandon the web site for something more interesting.

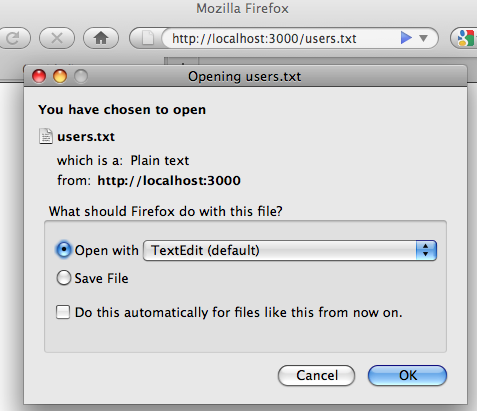

But let’s suppose a Rails web site sets my expectations a little differently. As an example, let’s say that I need to download a report containing the entire list of user accounts from a database table. When I click the “download user report” link, suppose I see this in Firefox:

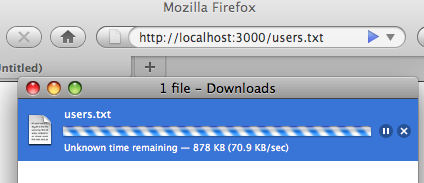

Now instead of waiting to see a web page listing all of the users, I’m waiting to download a large text or spreadsheet file containing the user list. Already, the web site has lowered my performance expectations, since I’ve downloaded large files from the Internet many times before and I know it might take a few minutes to complete. Now when I click OK, Firefox displays this progress bar:

Looking at this progress bar I’m more willing to wait. Besides displaying the animation, Firefox is also telling me how fast the data is being downloaded, and also how much I’ve downloaded in total. Even though it tells me there an “Unknown time remaining” I’m less likely to get impatient since I know data is being steadily downloaded. Finally, when the request is finished I can double click to open the file:

The point here is that web site performance is often more about perception than reality. If it takes 5 minutes for Rails to display a web page no one will wait regardless of how important the data is to them, since in this case the web site seems broken and there’s no indication anything is happening. But if it takes 5 minutes to download a large file many users will happily wait, since they know their data is steadily arriving from the server and that it’s just a matter of time.

How to implement this in your controller

Using the user/name/email example from part 1, here’s the controller code you’ll need to implement this in a Rails 2.x application:

def index respond_to do |format| format.text do headers["Content-Type"] = "text/plain" headers["Content-disposition"] = 'attachment; filename="users.txt"' render :text => proc { |response, output| User.find_each do |u| output.write "#{u.name} #{u.email}\n" end } end end end

It turns out in Rails 3.0 render :text doesn’t support passing a proc, so you’ll need this code instead:

def index respond_to do |format| format.text do headers["Content-Type"] = "text/plain" headers["Content-disposition"] = 'attachment; filename="users.txt"'erroneous_call_to_proc = false self.response_body = proc { |response, output| unless erroneous_call_to_proc User.find_each do |u| output.write "#{u.name} #{u.email}\n" end end erroneous_call_to_proc = true }end end end

In both Rails 2.x and Rails 3, the key here is that instead of using a Rails view to render my page, I provide a proc which is later called with the response object and the output stream. The proc can then repeatedly call output.write to stream the response to the client, one user at a time.

Code walk through

There are a few different things going on in this code snippet, so let’s take them one at a time. First, I indicate to the client that the response is going to be a file, and not a standard web page by setting the “Content-Type” and “Content-Disposition” response headers like this:

headers["Content-Type"] = "text/plain" headers["Content-disposition"] = 'attachment; filename="users.txt"'

Now the browser knows it’s going to receive a text file called “users.txt.” Note that normally in a Rails app you would use send_file or send_data to set these headers, but these functions don’t support streaming data in this manner. (Hmm... sounds like an opportunity for a Rails patch or plugin.) Also, if you knew ahead of time exactly how much data you were going to send to the client, you could also set the “Content-Size” header allowing the browser to display the total download size and correctly calculate the download time remaining.

Next, I call render :text and provide a proc instead of the actual text response. Rails will return from the controller action immediately, but later call the proc when it’s time to generate the response.

render :text => proc { |response, output|As I said earlier, for Rails 3.0 you need to directly set self.response_body, instead of calling render :text.

self.response_body = proc { |response, output|Finally, inside the proc, I have two variables available to me: the response object and also the output stream. By calling output.write, I’m able to send whatever text I would like to the client, which will be immediately streamed to the network. In this example, what I do inside the response proc is call User.find_each to iterate over all of the user records, calling output.write for each one.

User.find_each do |u| output.write "#{u.name} #{u.email}\n" end

Note: right now for Rails 3 it turns out there’s a bug that causes the response proc to be called one extra time. To avoid repeating all of the SQL queries unnecessarily, I’ve added a bit of code to ignore the second call to the proc:

def index respond_to do |format| format.text do headers["Content-Type"] = "text/plain" headers["Content-disposition"] = 'attachment; filename="users.txt"'erroneous_call_to_proc = falseself.response_body = proc { |response, output|unless erroneous_call_to_procUser.find_each do |u| output.write "#{u.name} #{u.email}\n" endend erroneous_call_to_proc = true} end end end

Yes, this is super ugly but for now might be the only option for streaming in Rails 3. Hopefully in an upcoming version of Rails 3.0.x this will be fixed. I might even try to submit a Rails patch myself if I have time.

find_each vs. find: memory usage

Besides using a proc to stream the result, the other important detail here is the use of find_each instead of find :all. Calling find_each allows me to avoid loading the entire data set into memory all at once. If I have 10,000 or 100,000 records in the users table, not only do I have to worry about taking a long time to return all the records in a single HTTP request, but I also have to worry about Rails using up all of the available memory. Calling User.all or User.find :all would return an array 10,000 or 100,000 user model objects, all loaded into memory at one time. If there were many long columns in each user record, this might quickly use up all of the available memory on my server.

However, find_each iterates through the user records in batches, yielding each user record to my block one at a time. By default 1000 user records are loaded at a time, meaning that only 1000 user objects are loaded into memory at any given time, instead of 10,000 or 100,000. See Ryan Daigle’s nice rundown or the Rails documentation for more details on find_each.

If you take a look at your Rails log file, you’ll see how find_each loads the user records in batches of 1000, using the primary key of the last record loaded as a filter for each subsequent query:

User Load (20.6ms) SELECT "users".* FROM "users" WHERE ("users"."id" >= 0) ORDER BY users.id ASC LIMIT 1000

User Load (21.8ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 16632) ORDER BY users.id ASC LIMIT 1000

User Load (31.3ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 17632) ORDER BY users.id ASC LIMIT 1000

User Load (21.2ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 18632) ORDER BY users.id ASC LIMIT 1000

User Load (21.2ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 19632) ORDER BY users.id ASC LIMIT 1000

User Load (21.1ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 20632) ORDER BY users.id ASC LIMIT 1000

User Load (62.5ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 21632) ORDER BY users.id ASC LIMIT 1000

User Load (21.2ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 22632) ORDER BY users.id ASC LIMIT 1000

User Load (22.0ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 23632) ORDER BY users.id ASC LIMIT 1000

User Load (21.7ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 24632) ORDER BY users.id ASC LIMIT 1000

User Load (0.2ms) SELECT "users".* FROM "users" WHERE ("users"."id" > 25632) ORDER BY users.id ASC LIMIT 1000

etc...

Here the last record loaded by the first SQL query had an id of 16632, and find_each added 1000 to that id value each time to obtain the next 1000 users.

Streaming data on your machine with Rails 3 and Mongrel

The other caveat with the streaming code I show above is that it relies on the underlying web server to stream the results properly via Rack to the user or client. Practically speaking, this means that you need to use Apache/Passenger, Mongrel or some other modern Rails stack to stream the response - but not Webrick. If you try the code above with Webrick you’ll have to wait for the entire response to be loaded into memory and downloaded all at once before Firefox can display the file-open dialog box; in other words the data won’t be streamed at all.

To wrap things up for today, let’s build a sample Rails 3 app together right now from scratch that demonstrates file streaming:

$ rails -v

Rails 3.0.0

$ rails new stream_users

create

create README

create Rakefile

create config.ru

create .gitignore

create Gemfile

create app

create app/controllers/application_controller.rb

etc...$ cd stream_users/ $ rails generate scaffold user name:string email:string $ rake db:migrate

And finally I’ll create a large data set in the console... this takes a few minutes for my laptop to complete:

$ rails console Loading development environment (Rails 3.0.0) ruby-1.8.7-p302 > 100000.times do ruby-1.8.7-p302 > User.create :name=>'pat', :email=>'pat@patshaughnessy.net' ruby-1.8.7-p302 ?> end => 10

Now let’s take a look at the index action in the users_controller.rb file created by the scaffolding generator:

def index @users = User.allrespond_to <span class="r">do</span> |format| format.html <span class="c"># index.html.erb</span> format.xml { render <span class="sy">:xml</span> => <span class="iv">@users</span> } <span class="r">end</span>end

As I explained above, this will load all of the user records into memory at one time. By using a GC patched version of the Ruby interpreter, we can see how much memory this will take:

$ rails console Loading development environment (Rails 3.0.0) ruby-1.8.7-p302 > GC.enable_stats => false ruby-1.8.7-p302 > users = User.all; nil => nil ruby-1.8.7-p302 > GC.allocated_size => 129630037

That’s 129MB of data loaded into memory just for this simple “user” example with only two string columns! And I still haven’t tried to call my index.html.erb view file to render the web page.

Let’s repeat the same test using find_each, but I’ll call “break” as soon as my block is called for the first time:

$ rails console Loading development environment (Rails 3.0.0) ruby-1.8.7-p302 > GC.enable_stats => false ruby-1.8.7-p302 > User.find_each do |u| ruby-1.8.7-p302 > break ruby-1.8.7-p302 ?> end => nil ruby-1.8.7-p302 > GC.allocated_size => 2186329

Here I can see that only 2MB of memory is allocated. Of course as Rails iterates over all the user records more and more user objects will be created, but since the response proc streams the data out to the client each time the block is called, the Ruby garbage collector will free memory just as fast as it’s allocated.

Returning to the controller, I’ll paste in the streaming code from above:

def index respond_to do |format| format.text do headers["Content-Type"] = "text/plain" headers["Content-disposition"] = 'attachment; filename="users.txt"' self.response_body = proc { |response, output| User.find_each do |u| output.write "#{u.name} #{u.email}\n" end } end end end

Now let’s try using our new app:

$ rails server mongrel

Exiting

.../rack/handler/mongrel.rb:1:in `require':

no such file to load -- mongrel (LoadError)

.../rack/handler/mongrel.rb:1

.../rack/handler.rb:17:in `const_get'

etc...Oops - it turns out that with Rails 3 you need to explicitly load the mongrel gem in your Gemfile:

source 'http://rubygems.org'gem 'rails', '3.0.0'

gem 'mongrel'# Bundle edge Rails instead:# gem 'rails', :git => 'git://github.com/rails/rails.git'

gem 'sqlite3-ruby', :require => 'sqlite3'

And then use bundler to install it if necessary:

$ bundle install Using rake (0.8.7) Using abstract (1.0.0) Using activesupport (3.0.0) etc...

And now finally I can start up my mongrel server

$ rails server mongrel => Booting Mongrel => Rails 3.0.0 application starting in development on http://0.0.0.0:3000 => Call with -d to detach => Ctrl-C to shutdown server

... and stream all 100,000 user records by opening http://localhost:3000/users.text. For this example, the users.txt file was about 5MB large... certainly there’s no good reason to allocate over 200MB on the server to generate this!